A well-optimized website is crucial for attracting organic traffic and achieving your business goals. Technical SEO, the foundation of a strong SEO strategy, ensures search engines can effortlessly crawl, index, and understand your website. By conducting regular technical SEO audits, you can identify and address potential issues that hinder your website’s search engine visibility.

This guide will help you understand how to conduct a technical SEO audit using the latest trends, tools and best practices.

What is Technical SEO?

Technical SEO refers to the process of optimizing your website’s technical aspects to improve its visibility and performance in search engines. This includes enhancing site speed, ensuring mobile-friendliness, securing the site, and making sure search engines can crawl and index your site properly.

Key Elements of Technical SEO:

- Crawlability: Ensuring search engines can access and crawl your site.

- Indexability: Making sure your pages are indexed by search engines.

- Site Speed: Optimizing your website to load quickly.

- Mobile-Friendliness: Ensuring your site works well on mobile devices.

- Security: Implementing HTTPS to protect user data and build trust.

By addressing technical SEO issues, you pave the way for improved search engine visibility, organic traffic growth, and ultimately, better business outcomes.

When to Perform a Technical SEO Audit

Ideally, a technical SEO audit should be performed before launching a new website or making significant changes to an existing one. Regular audits are also essential to maintain optimal website performance. Consider scheduling a technical SEO audit in the following scenarios:

- Launching a new website: Ensure a solid technical foundation from the start.

- Significant decline in organic traffic: To identify potential technical issues affecting visibility.

- Making significant website changes: Migrations, redesigns, or CMS updates can introduce technical problems.

- Regularly, as part of ongoing SEO efforts: To stay ahead of algorithm updates and maintain website health.

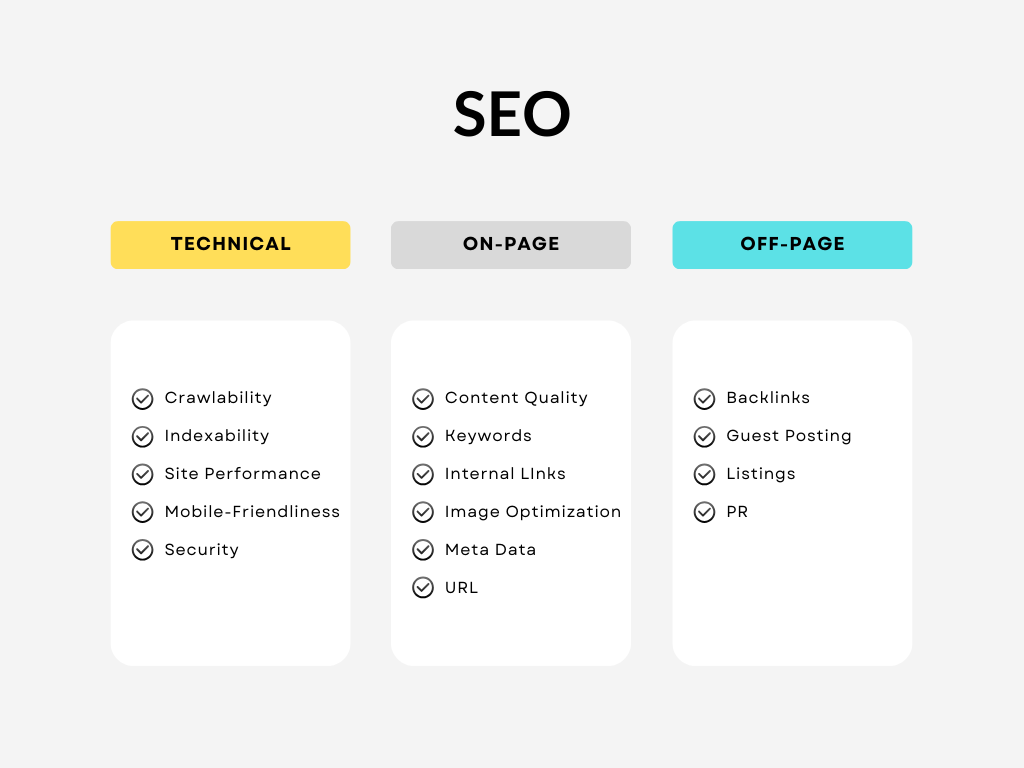

Difference Between Technical, On-Page, and Off-Page SEO Audits

To clarify, let’s differentiate between the three types of SEO audits:

Technical SEO Audit:

- Focus: The website’s infrastructure and backend.

- Elements: Site speed, mobile optimization, security, crawlability, and indexability.

- Tools: Screaming Frog, Google Search Console, GTmetrix.

On-Page SEO Audit:

- Focus: Content and keyword optimization.

- Elements: Meta tags, headers, keyword density, content quality.

- Tools: Screaming Frog, SEMrush, Moz.

Off-Page SEO Audit:

- Focus: External factors affecting SEO.

- Elements: Backlinks, social signals, domain authority.

- Tools: Ahrefs, Majestic, Moz Link Explorer.

A comprehensive SEO strategy incorporates all three types of audits for holistic website optimization.

Tools for SEO Audit

Using the right tools can make your SEO audit more efficient and effective. Here are some essential tools for conducting a technical SEO audit in 2024:

- Screaming Frog: A powerful site crawler that helps identify technical issues. The free version allows you to crawl up to 500 URLs. It includes many basic features such as finding broken links, analyzing page titles and metadata, and generating XML sitemaps.

- Google Search Console: Provides insights into indexing and crawl errors. Google Search Console is a free tool provided by Google. It helps you monitor and maintain your website’s presence in Google search results.

- GTmetrix: Measures site speed and provides optimization suggestions.

- Ahrefs: Offers comprehensive site analysis, including backlinks and keywords.

- Google PageSpeed Insights: Evaluates site speed and performance.

- SEMrush: A versatile tool for SEO, PPC, and content marketing audits.

How to Perform a Technical SEO Audit (Checklist)

Performing a technical SEO audit ensures that your website is optimized for search engines and provides a good user experience. The checklist below covers five areas: crawlability, indexability, site performance, mobile-friendliness, and security. For each one, let’s look at what to check and how to fix common problems.

Crawlability:

Crawlability refers to how easily search engine bots (also known as spiders or crawlers) can access and navigate your website. This involves optimizing:

- XML Sitemap

- Robots.txt

- URL Structure

- Internal Linking

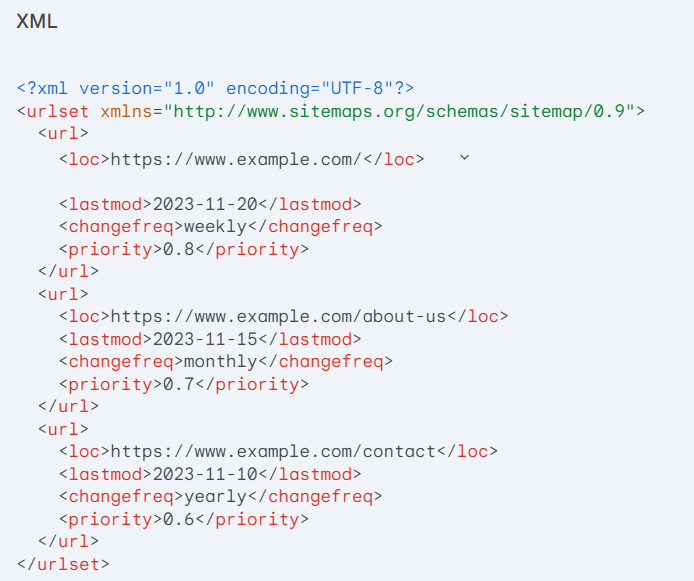

1. XML Sitemap

An XML sitemap is a file that provides information about the URLs on your website to search engines. A well-structured sitemap can improve crawling efficiency and indexing. Ensure it’s up-to-date, accessible, and includes important pages.

Tips for an Effective XML Sitemap

- Include All Important Pages: Ensure your sitemap covers all essential pages, including product pages, category pages, blog posts, and contact pages.

- Exclude Unnecessary Pages: Avoid including pages with low value, duplicate content, or pages with noindex directives.

- Use Clear and Consistent Naming: Create meaningful sitemap filenames (e.g., sitemap_products.xml).

- Keep It Up-to-Date: Regularly update the sitemap to reflect changes in your site’s structure and content.

- Test and Monitor: Regularly check your sitemap’s status and any reported errors in Google Search Console.

- Submit Your Sitemap: Submit your XML sitemap to search engines using Google Search Console, Bing Webmaster Tools, or other webmaster tools.

Here’s a simplified example of an XML Sitemap containing a few URLs:
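The sitemap format itself is simple: a `<urlset>` element containing one `<url>` entry per page, each with a `<loc>` and an optional `<lastmod>`. As a minimal sketch using only Python's standard library (the example.com URLs and dates are placeholders), the following builds such a sitemap for a few URLs:

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return sitemap XML for a list of (url, lastmod) pairs.

    lastmod is a W3C date string such as "2024-06-01", or None to omit it.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & and <
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    ET.indent(urlset)  # pretty-print (Python 3.9+)
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Placeholder pages; replace with your own URLs and last-modified dates.
    pages = [
        ("https://www.example.com/", "2024-06-01"),
        ("https://www.example.com/products/organic-coffee", "2024-05-20"),
        ("https://www.example.com/blog/seo-tips-for-beginners", None),
    ]
    print(build_sitemap(pages))
```

ElementTree escapes characters such as `&` in URLs automatically, which the sitemap protocol requires.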

Example Sitemap Structure for a Large E-commerce Website

- Main Sitemap: Includes homepage, category pages, contact, about us, etc.

- Product Sitemap: Contains all product pages.

- Image Sitemap: Lists important images with descriptive information.

- Sitemap Index: Points to the individual sitemaps.
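A large catalogue quickly outgrows a single file: the sitemaps.org protocol limits each sitemap to 50,000 URLs (and 50 MB uncompressed), which is why a sitemap index is used. The Python sketch below splits a hypothetical list of product URLs into groups of at most 50,000 and writes an index pointing at one file per group. The file names (`sitemap_main.xml`, `sitemap_images.xml`, `sitemap_products_N.xml`) are hypothetical, and each listed file would still need to be generated as a regular sitemap.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit in the sitemaps.org protocol


def chunk(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into consecutive lists of at most `size` items."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(sitemap_urls):
    """Return sitemap index XML that points at individual sitemap files."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc in sitemap_urls:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = loc
    ET.indent(index)  # pretty-print (Python 3.9+)
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(index, encoding="unicode")


if __name__ == "__main__":
    base = "https://www.example.com"
    # Hypothetical catalogue of 120,000 product pages -> 3 product sitemaps.
    products = [f"{base}/products/item-{n}" for n in range(120_000)]
    product_files = [f"sitemap_products_{i + 1}.xml" for i in range(len(chunk(products)))]
    files = ["sitemap_main.xml", "sitemap_images.xml"] + product_files
    print(build_sitemap_index(f"{base}/{name}" for name in files))
```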

2. Robots.txt

A well-structured robots.txt file is essential for effective search engine crawling. It provides clear instructions to search engine crawlers about which pages they can and cannot access on your website.

Basic Structure:

A robots.txt file consists of two primary directives:

- User-agent: Specifies the search engine crawler or bot to which the following rules apply.

- Disallow: Tells the specified crawler not to crawl URLs whose path starts with the given value.

Example:

```text
User-agent: *
Disallow: /admin/
Disallow: /private/
```

This example tells all search engines not to crawl pages in the /admin/ and /private/ directories.

Note: To apply directives to all search engines, use `*` as the user-agent value. To block only a specific search engine from certain pages or folders, use that search engine’s crawler name, such as Googlebot for Google or Bingbot for Bing.

Additional Directives:

- Allow: Specifies URLs that can be crawled even if they are part of a disallowed directory.

- Sitemap: Indicates the location of your XML sitemap.

Example with Allow and Sitemap:

```text
User-agent: Googlebot
Disallow: /tmp/
Allow: /tmp/images/
Sitemap: https://www.example.com/sitemap.xml
```
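When Allow and Disallow rules overlap, as /tmp/ and /tmp/images/ do here, Google follows the precedence defined in RFC 9309: the most specific (longest) matching path wins, and if an Allow and a Disallow rule are equally specific, Allow wins. The minimal Python sketch below applies that rule to a single user-agent group. It ignores the `*` and `$` wildcards, so treat it as an illustration rather than a complete robots.txt implementation.

```python
def is_allowed(rules, path):
    """Decide whether `path` may be crawled under one user-agent group.

    `rules` is a list of (directive, path_prefix) pairs, for example
    [("Disallow", "/tmp/"), ("Allow", "/tmp/images/")].
    The longest matching rule wins; on a tie, Allow wins.
    Wildcards (* and $) are not handled in this simplified sketch.
    """
    best_length = -1
    allowed = True  # no matching rule means the path may be crawled
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue  # empty values and non-matching rules are ignored
        is_allow = directive.lower() == "allow"
        if len(prefix) > best_length or (len(prefix) == best_length and is_allow):
            best_length = len(prefix)
            allowed = is_allow
    return allowed


googlebot_rules = [("Disallow", "/tmp/"), ("Allow", "/tmp/images/")]
print(is_allowed(googlebot_rules, "/tmp/images/logo.png"))  # True
print(is_allowed(googlebot_rules, "/tmp/cache.html"))       # False
print(is_allowed(googlebot_rules, "/blog/"))                # True
```

Python's built-in `urllib.robotparser` applies the first matching rule in file order instead, so it would report /tmp/images/logo.png as blocked under these same rules.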

Example of a More Detailed Robots.txt:

```text
User-agent: Googlebot
Disallow: /admin/
Disallow: /private/
Disallow: /cgi-bin/
Allow: /images/
Sitemap: https://www.example.com/sitemap.xml

User-agent: Bingbot
Disallow: /admin/
Disallow: /private/
Sitemap: https://www.example.com/sitemap.xml
```

This example shows how you can specify different rules for different search engine crawlers.
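Before publishing rules like these, you can check which crawler may reach which URL. This sketch feeds the detailed example above to Python's standard `urllib.robotparser` module; the URLs being checked are placeholders. Two caveats: this parser treats a blank line as the end of a group, so keep each group's lines together, and it applies the first matching rule rather than the longest one, which only matters when Allow and Disallow paths overlap.

```python
from urllib.robotparser import RobotFileParser

# The detailed robots.txt example; a blank line separates the two groups.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /admin/
Disallow: /private/
Disallow: /cgi-bin/
Allow: /images/
Sitemap: https://www.example.com/sitemap.xml

User-agent: Bingbot
Disallow: /admin/
Disallow: /private/
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

checks = [
    ("Googlebot", "https://www.example.com/cgi-bin/script"),
    ("Bingbot", "https://www.example.com/cgi-bin/script"),   # not blocked for Bingbot
    ("Bingbot", "https://www.example.com/private/report"),
    ("Googlebot", "https://www.example.com/images/logo.png"),
]
for agent, url in checks:
    verdict = "allowed" if parser.can_fetch(agent, url) else "blocked"
    print(f"{agent:<10} {verdict:<8} {url}")

print(parser.site_maps())  # Sitemap lines found in the file (Python 3.8+)
```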

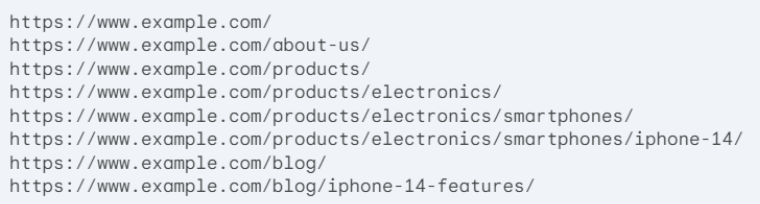

3. URL Structure

A well-structured URL is clear, concise, and informative, helping both users and search engines understand the page content.

Good URL Structure

✔ Descriptive and Keyword-Rich:

Example: example.com/seo-tips-for-beginners

Why: This URL clearly describes the page content and includes relevant keywords, helping search engines understand the topic.

✔ Hyphen-Separated:

Example: example.com/seo-best-practices

Why: Hyphens are used to separate words, making the URL readable and improving keyword relevance.

✔ Consistent Format:

Example: example.com/products/organic-coffee

Why: Using lowercase letters and a consistent format throughout the site avoids confusion and potential duplicate content issues.

Example of a Well-Structured URL Hierarchy

Bad URL Structure

✘ Unclear and Complex:

Example: example.com/index.php?page=category&subid=456&item=789

Why: This URL is cluttered with parameters and doesn’t provide clear information about the page content.

✘ Non-Descriptive:

Example: example.com/xyz123

Why: This URL doesn’t indicate what the page is about, making it difficult for search engines and users to understand its content.

✘ Uppercase Letters:

Example: example.com/SEO-Tips

Why: URL paths are case-sensitive, so example.com/SEO-Tips and example.com/seo-tips can be treated as two different pages, which may cause duplicate content issues. Sticking to lowercase avoids this.
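These rules are easy to check in bulk. This Python sketch (an illustration, not a standard tool) turns a page title into a lowercase, hyphen-separated slug and flags query parameters, uppercase letters, and underscores in existing URLs. It only handles ASCII characters, and it cannot judge whether a slug like /xyz123 is descriptive; that still needs a human review.

```python
import re
from urllib.parse import urlsplit


def slugify(title):
    """Lowercase the title and join its words with hyphens (ASCII only)."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


def url_issues(url):
    """Return the URL-structure problems found in `url`."""
    # Add "//" when the scheme is missing so urlsplit treats the host as netloc.
    parts = urlsplit(url if "//" in url else "//" + url)
    issues = []
    if parts.query:
        issues.append("uses query parameters")
    if parts.path != parts.path.lower():
        issues.append("contains uppercase letters")
    if "_" in parts.path:
        issues.append("uses underscores instead of hyphens")
    return issues


if __name__ == "__main__":
    print(slugify("SEO Tips for Beginners!"))  # seo-tips-for-beginners
    for url in ("example.com/seo-best-practices",
                "example.com/index.php?page=category&subid=456&item=789",
                "example.com/SEO-Tips"):
        print(url, url_issues(url) or "ok")
```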

4. Internal Linking

Internal linking is essential for search engine crawlability, user experience, and SEO. Check for broken links, orphan pages, and proper anchor text.

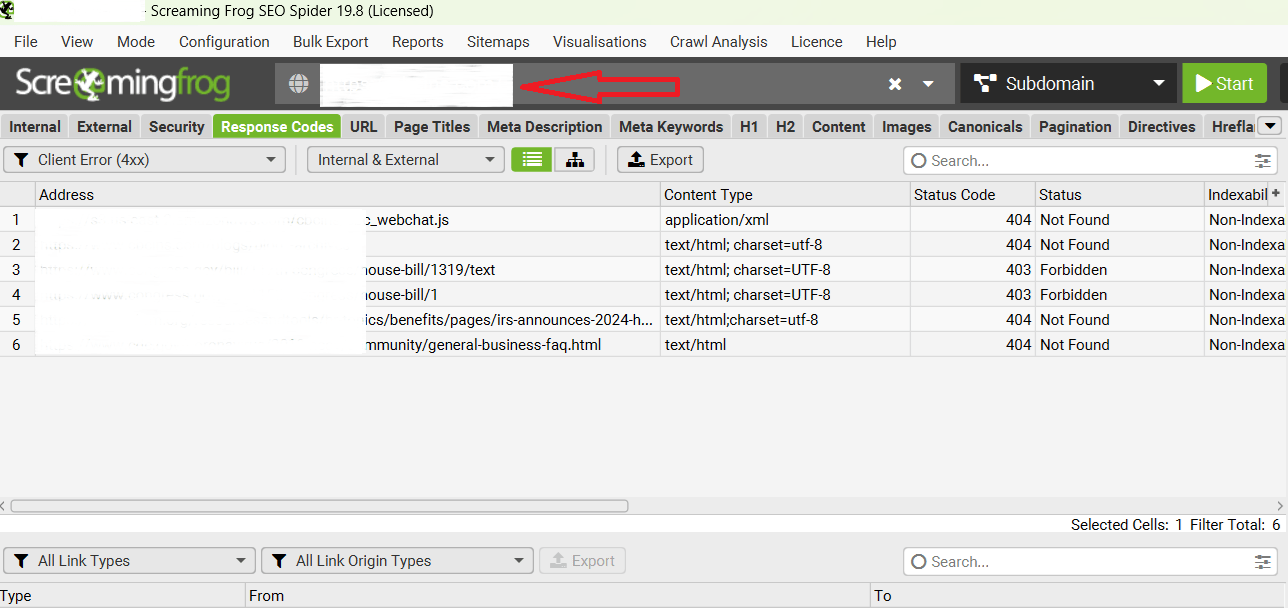

Broken Links

Identify: Use website crawling tools to find broken links.

Redirect: Implement 301 redirects to send users and search engines to the correct page.

Remove: If a page is permanently removed, consider using a 404 page with relevant internal links.

The screenshot shows the Screaming Frog tool in action. To check for 404 pages, enter your URL into Screaming Frog and wait for the crawl to finish. Then open the Response Codes section to find any 404 errors.
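Once a crawler has exported status codes and links, finding what to fix is a simple join. In this Python sketch the `crawl` dictionary is a hypothetical stand-in for that export; each broken target (any 4xx status) is reported together with the page linking to it, so you know where to update the link or which URL needs a 301 redirect.

```python
def find_broken_links(crawl):
    """Return (source_page, target) pairs where the target returned a 4xx status.

    `crawl` maps each crawled URL to (status_code, outgoing_internal_links),
    a hypothetical stand-in for a crawler export.
    """
    broken = []
    for source, (_status, links) in crawl.items():
        for target in links:
            status = crawl.get(target, (None, []))[0]  # None if never crawled
            if status is not None and 400 <= status < 500:
                broken.append((source, target))
    return broken


if __name__ == "__main__":
    crawl = {
        "/": (200, ["/about", "/old-offer"]),
        "/about": (200, ["/", "/contact"]),
        "/contact": (200, []),
        "/old-offer": (404, []),
    }
    for source, target in find_broken_links(crawl):
        print(f"{source} links to broken page {target}")
```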

Orphan Pages

Identify: Use website analysis tools to find pages with no incoming links.

Link: Create internal links from relevant pages to connect orphan pages to the website structure.

Remove: If the page is irrelevant, consider removing it or redirecting it.

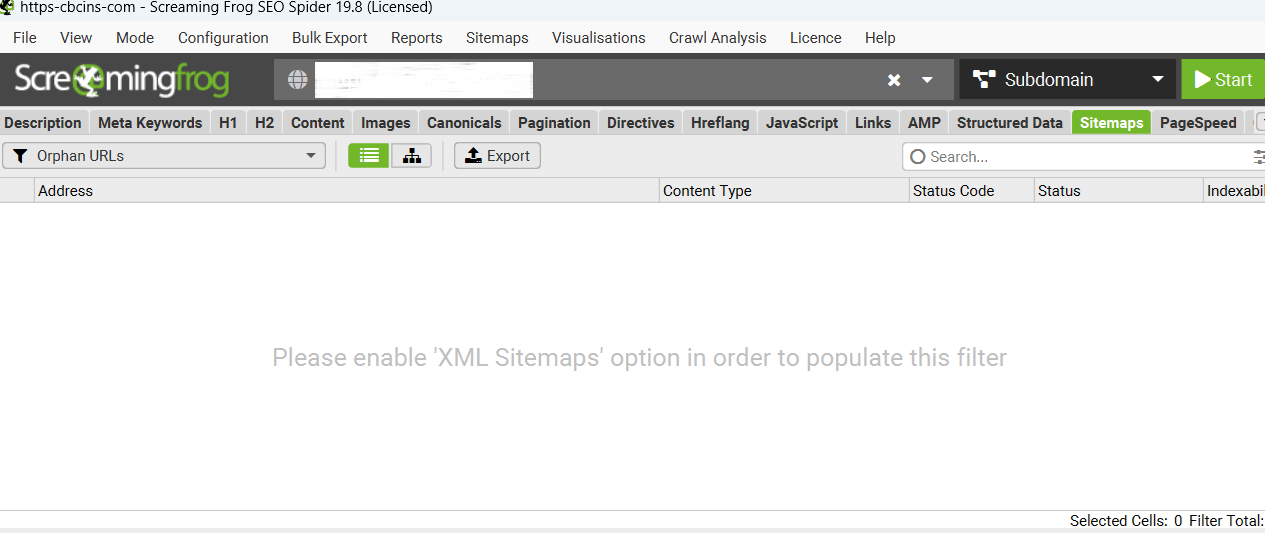

The screenshot shows the Screaming Frog tool in action. To check for orphan pages, enter your URL into Screaming Frog and wait for the crawl to finish. Then open the Sitemaps section to find any orphan pages. Orphan pages are found by comparing the URLs in your XML sitemap with the pages reached through internal links, so the crawl needs access to your sitemap.
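Conceptually, the orphan-page check compares two lists: the URLs you want indexed (for example, from your XML sitemap) and the URLs a crawler reaches by following internal links from the homepage. A minimal Python sketch, assuming you already have both as plain data (the link graph below is hypothetical):

```python
from collections import deque


def reachable_pages(link_graph, start):
    """Return every URL reachable from `start` by following internal links."""
    seen = {start}
    queue = deque([start])
    while queue:  # breadth-first crawl over the link graph
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


def find_orphan_pages(sitemap_urls, link_graph, homepage):
    """Sitemap URLs that a crawl starting at `homepage` never reaches."""
    return sorted(set(sitemap_urls) - reachable_pages(link_graph, homepage))


if __name__ == "__main__":
    links = {
        "/": ["/blog", "/products"],
        "/blog": ["/blog/seo-tips", "/"],
        "/products": ["/products/organic-coffee"],
        "/landing-2023": ["/products"],  # links out, but nothing links to it
    }
    sitemap = ["/", "/blog", "/blog/seo-tips", "/products",
               "/products/organic-coffee", "/landing-2023"]
    print(find_orphan_pages(sitemap, links, "/"))  # ['/landing-2023']
```

Using reachability rather than "has at least one inbound link" also catches pages that are linked only from other orphan pages.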

Proper Anchor Text

Relevance: Use anchor text that accurately describes the linked page’s content.

Keyword Optimization: Incorporate relevant keywords naturally into anchor text.

Diversity: Avoid keyword stuffing and use variations of anchor text.
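To spot weak anchor text at scale, you can extract every link's text and flag generic phrases. This Python sketch uses the standard `html.parser` module; the `GENERIC_ANCHORS` set is a hypothetical starting list, not an official one. Links with empty text (often image links) are flagged too, since for those the image's alt text acts as the anchor.

```python
from html.parser import HTMLParser

# Hypothetical list of generic phrases; extend it for your own site.
GENERIC_ANCHORS = {"click here", "read more", "here", "learn more", "this page"}


class AnchorCollector(HTMLParser):
    """Collect (href, anchor text) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:  # only collect text inside a link
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # normalize whitespace
            self.links.append((self._href, text))
            self._href = None


def weak_anchors(html):
    """Return links whose anchor text is empty or generic."""
    collector = AnchorCollector()
    collector.feed(html)
    return [(href, text) for href, text in collector.links
            if not text or text.lower() in GENERIC_ANCHORS]
```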

Indexability

Indexability is a page’s ability to be stored in a search engine’s index so that it can appear in search results. Key factors include:

- Google Search Console

- Duplicate Content

- Meta Robots Tags

1. Google Search Console

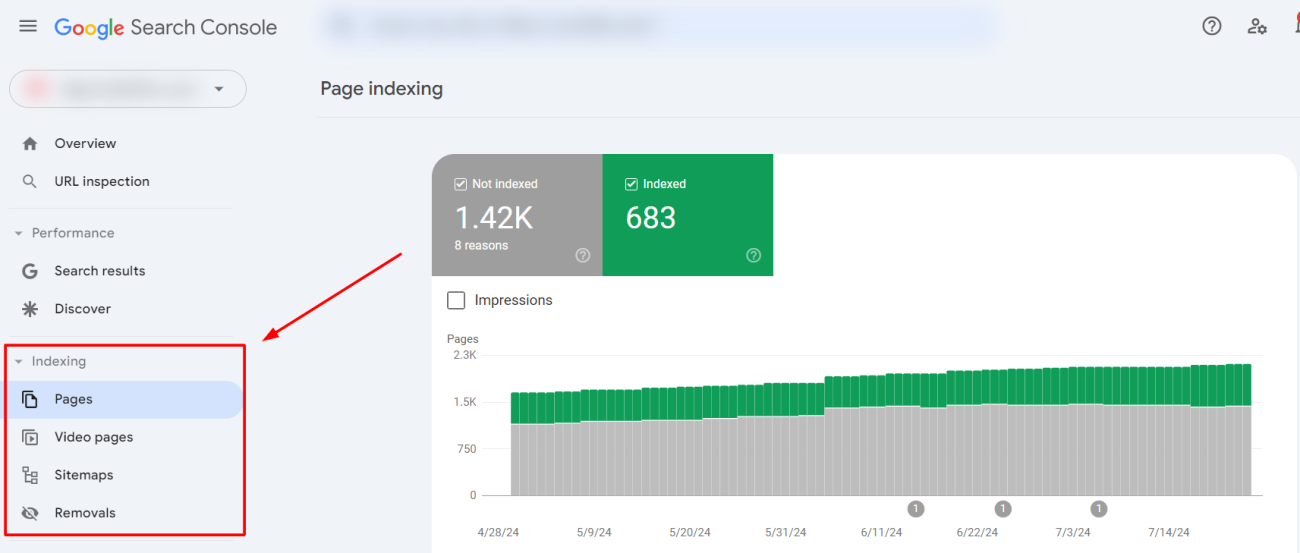

The Indexing section in Google Search Console is crucial for understanding how Google indexes your site and identifying any issues that may affect your site’s visibility in search results. Here’s an overview of the key components within the Indexing section:

- Video Pages: Shows the number of pages with video content that Google has indexed. It also lists video pages with indexing issues that prevent them from appearing in search results.

- Sitemaps: Allows you to submit your XML sitemaps to Google. This helps Google discover and index your pages more efficiently.

- Removals: Lets you request temporary removal of specific URLs from Google’s search results. Useful for quickly hiding content that is outdated or sensitive.

- Pages: Displays a list of URLs that have been successfully indexed by Google or have encountered issues. To gain a deeper understanding, let’s explore the different areas covered by the Page Indexing section, as shown in the screenshot below:

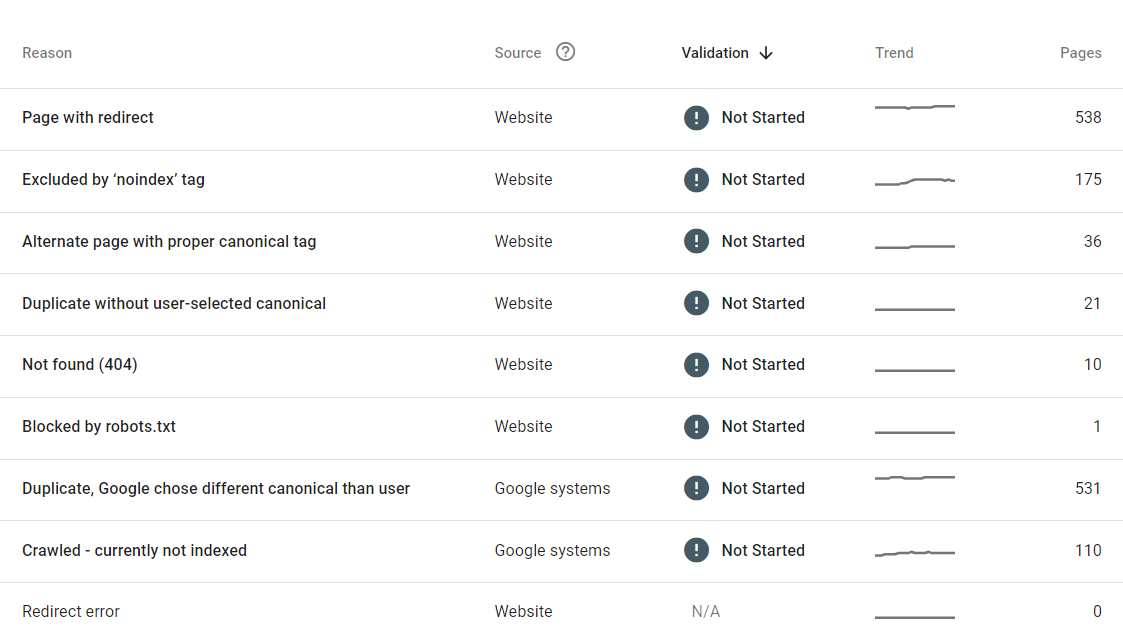

Let’s see what these issues are and how to fix them:

Page with Redirect

- Analyze: Check if the redirect is intentional. Use tools like HTTP status code checkers to confirm the type of redirect (301, 302).

- Fix: If unintended, update or remove the redirect. Ensure redirects point to the correct final URL without creating redirect chains.
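Redirect chains (A to B to C) are easiest to find by following each redirect to its end. In this Python sketch the `redirects` mapping is hypothetical input, such as a list exported from a crawler or your server configuration. `flatten_redirects` shows how every old URL could point straight at its final destination instead of passing through a chain, and `max_hops` is an arbitrary safety limit.

```python
def resolve_redirect(url, redirects, max_hops=10):
    """Follow `redirects` (source -> target) from `url`.

    Returns (final_url, hops). Raises ValueError on a loop or when the
    chain is longer than max_hops.
    """
    path = [url]
    while url in redirects:
        url = redirects[url]
        if url in path:
            raise ValueError("redirect loop: " + " -> ".join(path + [url]))
        path.append(url)
        if len(path) - 1 > max_hops:
            raise ValueError("too many redirects starting at " + path[0])
    return url, len(path) - 1


def flatten_redirects(redirects):
    """Map every source directly to its final destination, removing chains."""
    return {source: resolve_redirect(source, redirects)[0] for source in redirects}


if __name__ == "__main__":
    # Hypothetical redirect rules, e.g. exported from a crawler or server config.
    redirects = {
        "/old-page": "/interim-page",
        "/interim-page": "/new-page",
        "/promo": "/new-page",
    }
    for source in redirects:
        final, hops = resolve_redirect(source, redirects)
        if hops > 1:
            print(f"chain: {source} takes {hops} hops to reach {final}")
    print(flatten_redirects(redirects))
```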

Excluded by ‘noindex’ Tag

- Analyze: Identify pages with the noindex tag using the URL Inspection Tool.

- Fix: Remove the noindex tag from pages that should be indexed. Update your CMS settings if it’s adding noindex tags automatically.

Alternate Page with Proper Canonical Tag

- Analyze: Ensure the canonical tag is correctly pointing to the primary version of the page.

- Fix: Verify the canonical tag is properly set. Use canonical tags only when necessary and ensure they point to the correct URL to consolidate link equity.

Duplicate without User-Selected Canonical

- Analyze: Identify duplicate pages without canonical tags.

- Fix: Add canonical tags to the duplicate pages, pointing to the preferred version. This helps search engines understand which version to index.
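When reviewing canonical issues page by page, it helps to extract the canonical URL each page actually declares. This Python sketch uses the standard `html.parser` module and `urljoin` to resolve relative canonical URLs; the outcomes it reports are a simplification for auditing, not Google's own classification.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Record the href of every <link rel="canonical"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def check_canonical(page_url, html):
    """Summarize the canonical tag situation for one page."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonicals:
        return "missing canonical tag"
    if len(finder.canonicals) > 1:
        return "multiple canonical tags"
    target = urljoin(page_url, finder.canonicals[0])  # resolve relative hrefs
    if target == page_url:
        return "self-referencing canonical"
    return "canonicalized to " + target


if __name__ == "__main__":
    print(check_canonical("https://www.example.com/shoes?color=red",
                          '<head><link rel="canonical" href="/shoes"></head>'))
```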

Not Found (404)

- Analyze: Locate broken links and identify pages returning 404 errors.

- Fix: Redirect 404 pages to relevant content using 301 redirects or recreate the missing pages if appropriate. Regularly monitor and update internal links.

Blocked by Robots.txt

- Analyze: Check the robots.txt file for rules blocking important pages.

- Fix: Edit the robots.txt file to remove or update disallow rules that block pages you want indexed. Use robots.txt tester tools for validation.

Duplicate, Google Chose Different Canonical than User

- Analyze: Use the URL Inspection Tool to see which canonical URL Google has selected.

- Fix: Ensure that your preferred canonical URL is correct and not conflicting with other signals (e.g., internal links, sitemaps). Adjust canonical tags if needed.

Crawled – Currently Not Indexed

- Analyze: Identify pages that have been crawled but not indexed. This can be due to low-quality content or crawl budget issues.

- Fix: Improve the quality and relevance of the content. Check for and fix any crawl errors. Ensure the page provides unique value and is linked internally.

2. Duplicate Content

Duplicate content can significantly impact your website’s search engine rankings and indexing. Here’s a step-by-step guide on how to identify and address it:

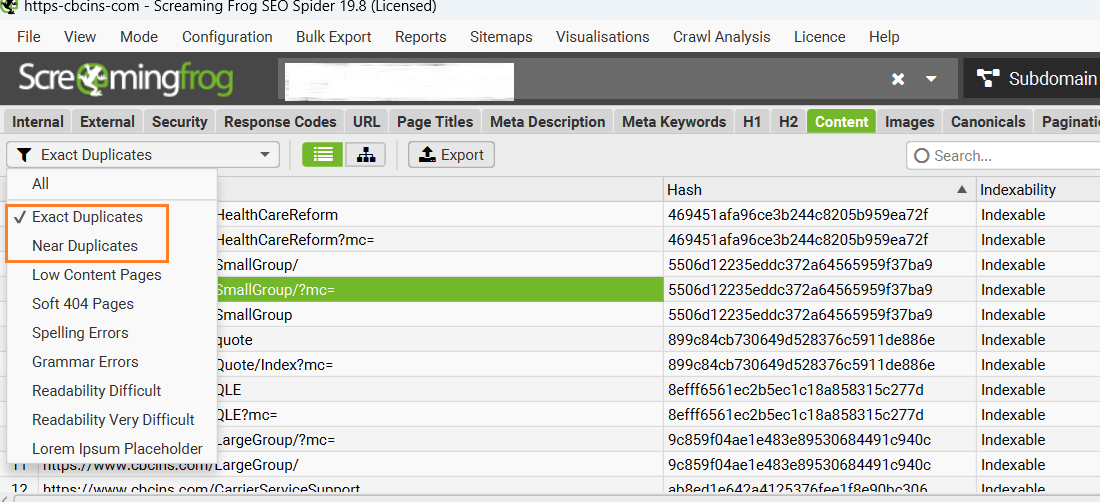

Identifying Duplicate Content: Use tools like Google Search Console, Screaming Frog, Siteliner, Copyscape, and SEMrush to scan your website for duplicate content. For example, Screaming Frog crawls your website and finds duplicate content based on content similarity. Go to the Content section and filter for exact or near duplicates.

If you don’t see any results, either your website has no duplicate content or the near-duplicate check is not enabled. To enable it, go to Crawl Analysis > Configure > Content > Duplicate > Enable Near Duplicate.

You can also run a Google search using a snippet of your content in quotes to find other instances of that content across the web or within your own site. For example, copy a paragraph from your website and search for “Your Content” on Google. If the same text appears on other URLs, that content is duplicated.
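To see how similarity-based duplicate detection works, here is a minimal Python sketch that compares pages by their overlapping three-word sequences (shingles) using Jaccard similarity. It is an illustration, not the algorithm any particular tool uses; the 0.9 threshold is an arbitrary assumption, and comparing every pair of pages only suits small sites.

```python
import re


def shingles(text, size=3):
    """Return the set of `size`-word sequences (shingles) in `text`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(text_a, text_b, size=3):
    """Jaccard similarity of two texts' shingle sets, from 0.0 to 1.0."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a and not b:
        return 1.0  # two texts too short to shingle are treated as identical
    return len(a & b) / len(a | b)


def near_duplicates(pages, threshold=0.9, size=3):
    """Yield (url_a, url_b, score) for page pairs at or above `threshold`."""
    urls = sorted(pages)
    for i, url_a in enumerate(urls):
        for url_b in urls[i + 1:]:
            score = similarity(pages[url_a], pages[url_b], size)
            if score >= threshold:
                yield url_a, url_b, round(score, 2)
```

Here `pages` maps each URL to its extracted body text, for example `{"/a": "...", "/b": "..."}`.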

Fixing Duplicate Content: If your website has duplicate content across its own pages, try the following steps:

- 301 Redirects: Use 301 redirects to consolidate duplicate pages. Redirect duplicate URLs to the primary page to pass on link equity and traffic to the preferred version.

- Canonical Tags: Implement canonical tags to signal the preferred version of a page. Add `<link rel="canonical" href="URL">` to the `<head>` section of each duplicate page, pointing to the main page.

If your pages duplicate content from other websites, rewrite them with unique and valuable content. Focus on producing high-quality, original content that provides value and sets your pages apart.

3. Meta Robots Tags

Meta robots tags control whether search engines index your pages and follow the links on them. Tools like Google Search Console, SEMrush, and Ahrefs can help identify pages with meta robots tags. Check for pages that are unintentionally blocked from indexing, for example by a leftover noindex directive.
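A quick way to catch unintended noindex directives during an audit is to parse each page's meta robots tags. This Python sketch checks `<meta name="robots">` and `<meta name="googlebot">` tags with the standard `html.parser` module. It does not look at the X-Robots-Tag HTTP header, which can also carry noindex, so treat it as a partial check.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def blocks_indexing(html):
    """True if a meta robots tag on the page says noindex (or none)."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return bool(finder.directives & {"noindex", "none"})


if __name__ == "__main__":
    page = '<head><meta name="robots" content="noindex, follow"></head>'
    print(blocks_indexing(page))  # True
```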

Site Performance

Site speed refers to how quickly your website loads. Faster load times improve user experience and also positively impact search engine rankings. Factors affecting site speed include:

Image Optimization: Ensure images are compressed and have descriptive alt text.
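The alt-text part of this check is easy to automate. This Python sketch lists `<img>` tags whose alt attribute is missing or blank, using the standard `html.parser` module. An intentionally empty alt is valid for purely decorative images, so review the results rather than fixing them blindly.

```python
from html.parser import HTMLParser


class ImageAltAuditor(HTMLParser):
    """Collect the src of each <img> whose alt attribute is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        if alt is None or not alt.strip():
            self.missing_alt.append(attributes.get("src") or "(no src)")


if __name__ == "__main__":
    auditor = ImageAltAuditor()
    auditor.feed('<img src="beans.jpg" alt="Organic coffee beans"><img src="banner.jpg">')
    print(auditor.missing_alt)  # ['banner.jpg']
```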

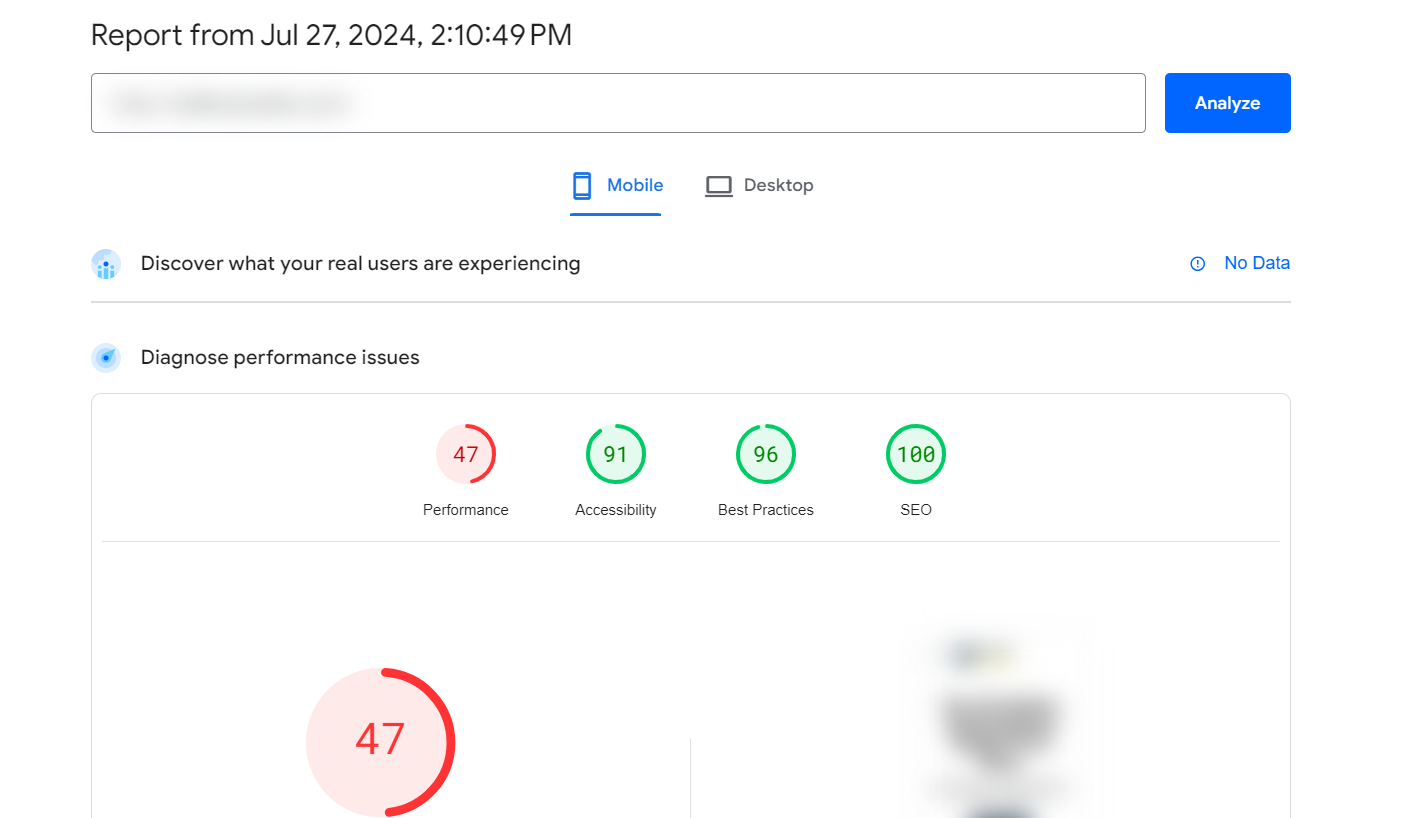

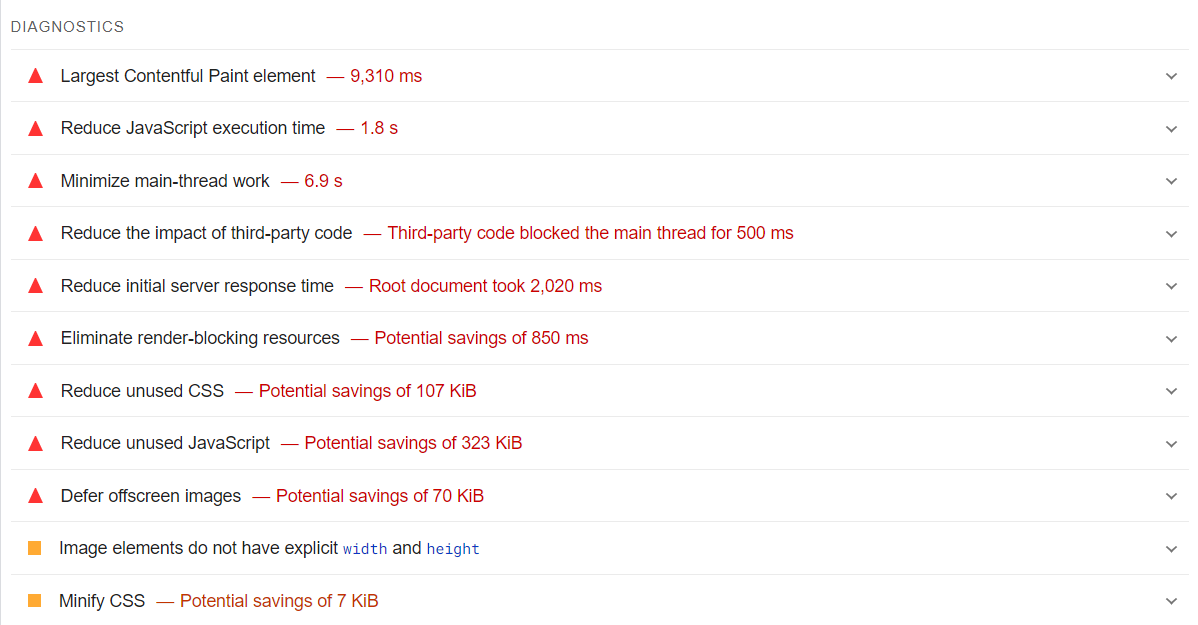

Page Speed: Test desktop and mobile load times using tools like Google PageSpeed Insights and GTmetrix.

- Visit the Google PageSpeed Insights website and analyze your URL, as shown in the screenshot.

Review the reported issues and work with your developer to resolve them.
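If you need to test many URLs, PageSpeed Insights is also available as an API. This Python sketch assumes the v5 `runPagespeed` endpoint with its `url` and `strategy` parameters and reads the Lighthouse performance score from the JSON response; check Google's current API documentation for quotas and whether you need an API key for your volume. Only `run_psi` makes a network call.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# PageSpeed Insights API v5 endpoint (check Google's documentation for changes).
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url, strategy="mobile", api_key=None):
    """Build the request URL; strategy is "mobile" or "desktop"."""
    params = {"url": page_url, "strategy": strategy}
    if api_key:
        params["key"] = api_key
    return PSI_ENDPOINT + "?" + urlencode(params)


def performance_score(report):
    """Return the Lighthouse performance score (0-100) from a parsed response, or None."""
    score = report["lighthouseResult"]["categories"]["performance"]["score"]
    return None if score is None else round(score * 100)


def run_psi(page_url, strategy="mobile", api_key=None, timeout=120):
    """Fetch a live report and return its performance score (needs network access)."""
    with urlopen(psi_request_url(page_url, strategy, api_key), timeout=timeout) as response:
        return performance_score(json.load(response))
```

For example, calling `run_psi("https://www.example.com/", "desktop")` and then `run_psi("https://www.example.com/", "mobile")` lets you compare desktop and mobile scores for the same page.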

Mobile-Friendliness

Mobile-friendliness refers to a website’s ability to adapt and display content effectively on various screen sizes, particularly smartphones and tablets. It ensures a seamless user experience across different devices.

How to Make Your Website Mobile-Friendly

1. Responsive Design

Ensure your website design adapts to different screen sizes and orientations. Use CSS media queries to create a responsive layout that adjusts elements such as images, text, and navigation menus based on the device’s screen size.

2. Fast Loading Times

Optimize your website to load quickly on mobile devices. Compress images, leverage browser caching, minify CSS, JavaScript, and HTML, and use a content delivery network (CDN) to reduce load times. You can use tools like Google PageSpeed Insights to analyze and improve your site’s speed.

3. Simplified Navigation

Make it easy for users to navigate your site on a small screen. Implement a clear and concise navigation menu, use large buttons, and ensure links are easy to tap.

4. Readable Text

Ensure text is readable without zooming. Use legible font sizes (at least 16px) and line heights, and avoid using fixed-width elements.

5. Touch-Friendly Design

Make sure buttons and links are easily tappable. Use appropriately sized touch targets (at least 48px by 48px) and provide sufficient spacing between interactive elements.

6. Optimized Images and Media

Ensure images and videos load quickly and display properly on mobile devices. Use responsive image techniques (srcset), compress images, and use HTML5 video players that adjust to screen sizes.
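Responsive images work by listing several sizes of the same image in `srcset` and letting the browser pick one. This Python sketch generates such a tag; the `<name>-<width>w.<ext>` file naming, the widths, and the `sizes` value are hypothetical, and the resized files themselves must already exist.

```python
import html


def build_srcset(base_name, widths, extension="jpg"):
    """Return a srcset value such as "coffee-480w.jpg 480w, coffee-800w.jpg 800w"."""
    # Hypothetical naming scheme: one resized file per width.
    return ", ".join(f"{base_name}-{w}w.{extension} {w}w" for w in sorted(widths))


def responsive_img(base_name, widths, alt, sizes="100vw", extension="jpg"):
    """Return an <img> tag whose src falls back to the smallest file."""
    fallback = f"{base_name}-{min(widths)}w.{extension}"
    return (
        f'<img src="{fallback}" '
        f'srcset="{build_srcset(base_name, widths, extension)}" '
        f'sizes="{html.escape(sizes)}" alt="{html.escape(alt)}">'
    )


if __name__ == "__main__":
    print(responsive_img("coffee", [480, 800, 1200], "Organic coffee beans",
                         sizes="(max-width: 600px) 100vw, 50vw"))
```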

Security

Website security is a critical aspect of technical SEO. It directly impacts user experience, search engine rankings, and overall website performance.

Key Security Measures for Technical SEO

1. Implement HTTPS

Search engines favor HTTPS websites, and HTTPS protects sensitive user information. Implement HTTPS to encrypt data transmitted between your website and its users.

2. Regular Software Updates

Keep your website software, plugins, and themes updated with the latest security patches. Outdated software is a common target for hackers.

3. Backup Your Website Regularly

Back up your website data regularly to protect against data loss due to attacks or technical issues.

The Takeaway

Conducting a technical SEO site audit is crucial for identifying and fixing technical issues that could be hindering your website’s performance in search engines. By following the checklist and using the recommended tools, you can ensure your website is technically sound and optimized for search engines. However, if this process seems overwhelming, Ranking Mantra is here to assist you. Our team of digital marketing experts can handle the entire technical SEO audit for you, ensuring that every aspect of your website is thoroughly analyzed and optimized.

FAQs

How often should you perform a technical SEO audit?

It’s recommended to conduct a full technical SEO audit at least twice a year. However, for larger websites with frequent updates, more frequent checks may be necessary.

How long does a technical SEO audit take?

The duration of a technical SEO audit depends on the website’s size and complexity. A small website might take a few hours, while a large e-commerce platform could require several days.

How much does a technical SEO audit cost?

The cost of a technical SEO audit can range from a few hundred to several thousand dollars, depending on the depth of the audit and the expertise of the auditor. Prices may vary based on the size of the website and the specific issues that need to be addressed.

What is the best tool for an SEO audit?

There isn’t a single best tool for SEO audits, as different tools offer unique features. However, popular options include Screaming Frog for site crawling, Google Search Console for indexing and performance insights, and GTmetrix for site speed analysis.

How do I know if my website needs a technical SEO audit?

If you’ve experienced a significant drop in organic traffic, have made major website changes, or haven’t performed an audit in over six months, your website likely needs one.

What is a good SEO audit score?

A good SEO audit score varies by tool, but generally, a score above 80% is considered good. However, the focus should be on addressing critical issues rather than achieving a perfect score.

What is the difference between technical SEO and local SEO?

Technical SEO focuses on optimizing the technical aspects of a website to improve its overall performance in search engines. Local SEO, on the other hand, aims to improve visibility for local searches, optimizing the website for location-specific queries and ensuring accurate local listings and reviews.